Chux's Notebook

This is a collection of my notes which I refer to on a regular basis. Hope it is also helpful for others stumbling by.

I think of these notes as mountaineering pegs. Often at the time of studying a particular paper or topic, the concepts are clear. Over time, however, only a vague impression remains, and I can no longer tussle with the issues. So these notes serve as pegs that I hope to use to re-scale an old hill, or at least scale it with less effort than starting from scratch.

Generally, I highlight main points like so, and put in-line code or numbers like so.

Built with mdBook.

About Me

I am working as a Lead Data Scientist at GovTech Singapore, on a team called JumpStart. We power search and recommender systems for Singapore Government products like the job searching portal MyCareersFuture or the CareersFinder tool to discover next steps for one's career.

My LinkedIn profile is here.

Current Focus

2025 Goals

Incorporate semantic IDs into a generalizable search and recommender system:

- Can function well with just a system prompt (guiding the overall goal of the system) and an item catalogue

- Handles a changing catalogue gracefully

- Good search and recommendation latency

- Cheap (runs on a T4 or equivalent)

Research Questions

-

Optimizing LLM explanations based on implicit feedback

- How to optimize an LLM to provide better recommendation explanations by fine-tuning on implicit feedback?

-

Replacing BM25

- How to design a search system that matches BM25 performance at cold start and gradually improves with more data, without dropping below BM25 performance?

-

Precise Retrieval

- The common two tower approach to embedding retrieval leaves much to be desired

- There is no natural score threshold at which items are deemed irrelevant. Traditionally, classifiers have a

0.5score cut-off. - Embedding retrieval tends to retrieve unrelated items. This is a well documented problem. For example,

Nike shoesretrievesAdidas Shoes. - Can we have embedding models that approximate AND / OR conditions that more naturally fit into the retrieval paradigm?

- There is no natural score threshold at which items are deemed irrelevant. Traditionally, classifiers have a

- The common two tower approach to embedding retrieval leaves much to be desired

-

Efficient learning of semantic IDs

- LLMs can learn Semantic IDs as part of their language and recommend and reason about items once they learn the "language" using Supervised Fine Tuning

- But the danger is catastrophic forgetting and losing capabilities as they get fine tuned in this way

- Is there a more "natural" way for LLMs to learn such semantic IDs?

- How do we handle a changing catalogue in real-time gracefully?

Thoughts

Short notes and ideas.

On ML Experiments

On using LLMs (e.g. Claude Code) to assist with ML experiments, especially on replicating existing results from papers.

There is much value in replicating ML experiment results from papers.

- Forces one to understand and implement the methods in the paper, no shortcuts

- Helps one understand how sensitive the results are to hyperparameters, data settings etc.

- Allows one to test out new methods or variations on the same task for an apples-to-apples comparison

Traditionally, this takes some time for each paper (days to weeks) depending on the complexity of the method, the compute cost, the expertise of the researcher who is performing the replication etc. There is also significant ambiguity in what defines a successful replication, since the paper may have inadvertently left out some implementation details, and the results might not be exactly replicable.

LLMs can make this process a lot faster, by:

- Performing requisite data cleaning

- Implementing the methods

- Monitoring the training and validation runs and fixing bugs along the way

This raises the question: what are the respective roles of the LLM and researcher in such a process? From my experience, I have tested out these loosely described paradigms:

- Iterative LLM-assisted coding

- Basically not too different from development before LLMs - the researcher codes from scratch and gets LLM to assist with each function, testing small pieces as we go along

- Spec-driven LLM development + post-hoc checking

- Researcher starts with detailed specifications file, detailing as much as possible about the data, method, hyperparameters, implementation process, evaluation criteria etc.

- LLM implements semi-autonomously, checking against the specifications

- After each phase, researcher reviews the code to understand it, refactor it, simplify it etc.

- Fully autonomous LLM

- Researcher provides the full paper to the LLM and high level specifications

- LLM implements end to end and submits results

I find that approach 1 produces the cleanest repo and understanding for the researcher. But it is also the slowest - significant time is spent debugging and doing the code edit -> run code loop manually. It's probably the approach that most researchers are using currently, to varying degrees of autonomy given to the LLM.

I could not get approach 3 to work. I tried running Claude Code in --dangerously-allow-permissions mode on runpod, and give it almost full autonomy in implementing and running the code. Some frustrating points:

- LLM kept using synthetic data even for the full run, even when explicitly spelled out that synthetic data is only for dev runs. When asked why it was using synthetic data, it said it failed to download the data. But managed to download the data on the very next instruction.

- LLM has no common sense. The paper detailed its method of generating synthetic queries on movielens using an LLM (since movielens only has interaction data) to train a search biencoder. Claude decided to generate synthetic queries of the form "What movie is

<title>?", despite being told to use an LLM to generate from the movie text. Such hard-coded queries obviously led to very high accuracies of> 99%, but Claude did not know to terminate and investigate. - LLM did not create meaningful evals. It mostly monitored train and validation loss, and even these were not very meaningful (it decided to sample only

500points for validation). The researcher needs to be explicit about specifying these.

Some reflections on testing out approach 3:

- Verification is hard in ML tasks. I tried providing the accuracy results from the paper to the LLM, asking it to benchmark against those figures to see if it is on the right track. The LLM was way off but did not know how to troubleshoot such problems. Normal test driven development also does not work for ML Tasks.

- Difficult to debug in fully autonomous setting. ML tasks are very sensitive to how the data is processed, hyperparameters etc. Even a small decision like left or right padding can significantly change results. Without diving into the code, I had no means of forming hypotheses about what was messing up the performance. LLMs are not well trained in this task of playing detective, and interrogating it did not yield much fruit.

- Unpleasant to understand code. Giving LLM full autonomy produces a lot of code that is intimidating to try to grok. I can probably do it with LLM assistance, but it produces the same daunting feeling as reviewing a large pull request. It also feels like unnecessary work as it entails spending time trying to understand lots of glue code.

Which leads me to approach 2. This is the approach I am currently testing out. The hope is that spec-driven development is a good middle ground. The researcher gets to use the specification to determine how far we want the LLM to sprint in each run, before we apply manual verification and understanding. This minimizes the chance of LLMs running completely off point and also makes it more palatable to understand a small piece of code each time.

Front-loading the development of specifications also forces the researcher to adopt systems-level thinking and design for the whole experiment. This hopefully helps us move up a level of abstraction and move along faster.

The main difficulties of approach 2 a-priori to me are:

- Designing the right places to pause for manual verification and understanding

- Getting the LLM to follow our specs closely and stop at the correct checkpoints

Will test it out and see how it goes!

Recent Trends in Search & Recommendations

This document summarizes some important ideas in both recommender systems and search systems that have emerged in the past few years. It aims to be a helpful read for someone trying to get acquainted with modern practices in these fields.

What we will cover:

- Embedding learning. Embedding learning for items and users is an upstream task that are used for downstream retrieval and ranking.

- Recommendations

- Search retrieval and ranking

Embedding Learning

Embedding learning is a foundational tool that powers all recommendation and search systems. Typically, users and items are represented by one or more embeddings that get fed into neural networks for prediction and recommendation tasks.

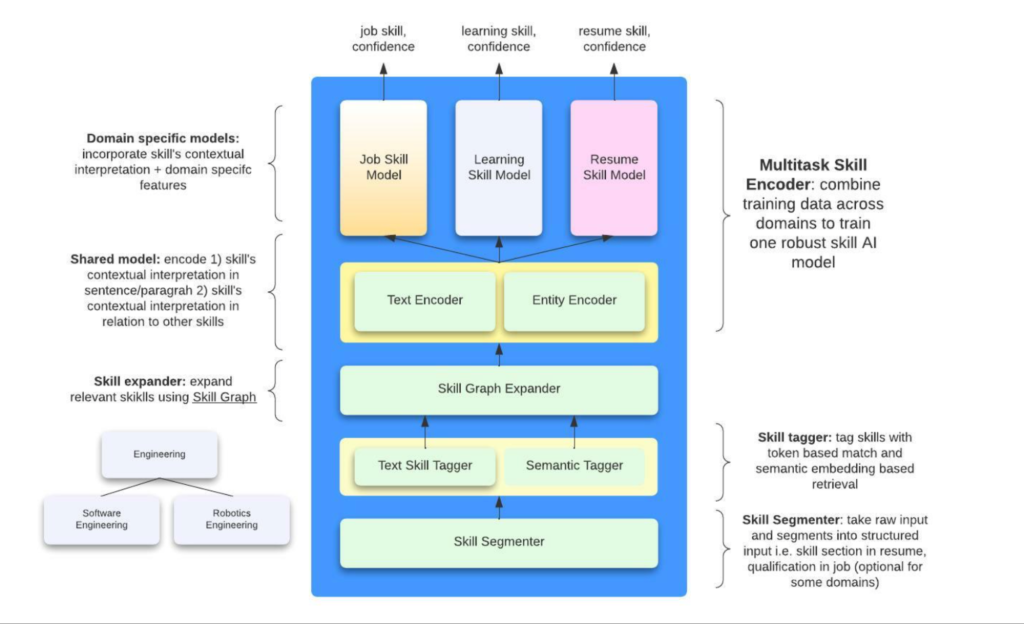

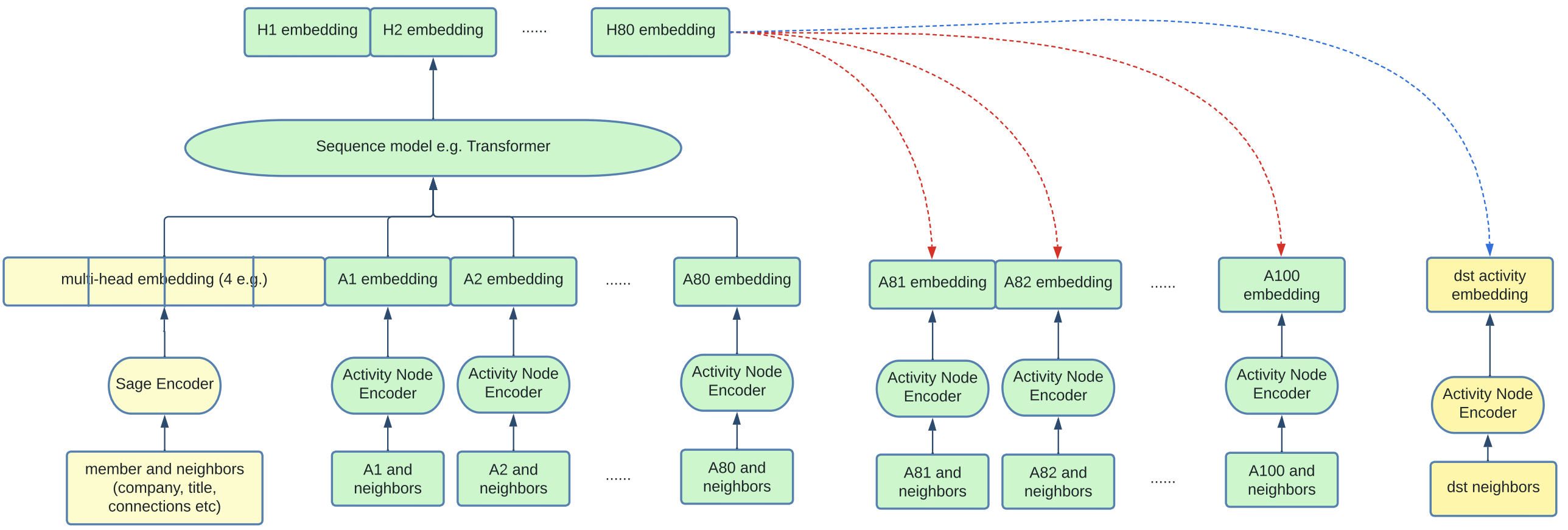

Whilst many off-the-shelf LLM embedding models exist today, the best performing embeddings are often context-specific. For example, from the perspective of a job-seeker, we may want the embeddings for Physical Education Teacher and Gym Trainer to be similar, as the focus is on similar job functions / skills. However, from the perspective of a HR personnel trying to do manpower planning, we may want Physical Education Teacher to be more similar to Executive at Singapore Sports School, since the focus is more on eligible pathways for internal rotation (btw, I made this example up). Hence, there is a need to effectively learn embedding models in a specific product setting. Lee 2020 describes the task like so:

(the goal is to) Learn embeddings that preserve the item-to-item similarities in a specific product context.

It is common practice to have an upstream pipeline to learn item embeddings that get re-used for multiple downstream tasks (let's call this a "universal" embedding). For example, YouTube uses a common set of video embeddings used by all its models [Lee 2020]. To adapt the embeddings to a specific task, the task model itself can always add a simple translation MLP layer to translate the universal embedding so that the embeddings can be more performant in each task setting.

Another way is to have each retrieval and ranker model learn its own embeddings for its specific task.

- However, this leads to redundancy to store and update each set of embeddings, often in some in-memory feature store, which is costly.

- This approach is also often not as performant as learning a universal set of embeddings and then adapting the embeddings to each task via a small learned MLP layer

- Training from a frozen set of universal embeddings is also much more compute efficient, as we do not need to forward/backward propagate into a potentially large language model that generates the embeddings from text or some other modality.

We can think of upstream training of an embedding model as analogous to the common practice of pre-training large language models in a semi-supervised manner on a large text corpus. The LLM is then fine-tuned for specific applications.

Although the performance of using multiple embeddings to represent an item has been proven to be more performant than using a single embedding (e.g. using ColBert), most companies seem to adopt a single embedding to reduce engineering complexity. For example, Pinterest briefly experimented with multiple embeddings per item but reverted to a single embedding to reduce costs.

There are 3 primary ways of embedding representation in the literature:

- ID-Based: This method maps an item's ID directly to an embedding.

- This is often used in a task-specific model, but not usually used for a universal embedding model shared by downstream tasks, as we cannot represent new items that have no user activity

- Content-Based: This is the most common approach, generating embeddings from an item's content, such as its text, images, or audio

- Textual content is usually passed into an LLM to generate embeddings

- For other modalities, task-agnostic preprocessing may be employed. For example, Lee 2020 sampling frames from a video and runs them through a ResNET model to create raw embeddings before they are fed into the main embedder model

- Graph-Based: This method can be seen as an extension of the content-based approach. It generates an embedding from the attributes of an item's neighbors in a network.

- This is particularly effective in social network products where network interactions are crucial, such as on LinkedIn, Pinterest, or Facebook

- While powerful, this method is more computationally expensive at both training and inference times because it requires access to the graph to compute embeddings

Embeddings are typically trained in a contrastive learning manner, which simply means that we want to encourage related items (e.g. videos frequently co-watched) to have high similarity scores and unrelated items (e.g. randomly sampled vidoe pairs) to have low scores.

Thus, we can think of contrastive learning as comprising 3 main components:

- Positive Sampling. How we mine for related items from data is a non-trivial, but an oft-neglected topic. Usually some behavioural statistics are used, e.g. Lee 2020 uses

videos frequently co-watched by usersto determine positive pairs. The guiding principle is to err on the side of being stricter in the selection of positive samples to minimize the appearance of false positives in the data (which are more harmful than false negatives).- In JumpStart, we have explored using Pointwise Mutual Information score to discard item pairs with low PMI score. Recall that the PMI score is computed as:

- Considering co-occurrence statistics focuses only on the numerator. Normalizing by the marginal probabilities of the items reduces the incidence of popular items being labelled as related when they are actually not

- In my experience, the bottom

10%of co-interacted items by pmi score can be safely discarded - Another subtle issue is that power users contribute disproportionately to positive pairs.

- If a given user interacts with items, then he/she generates item co-occurrence pairs

- The quadratic scaling in means that the item interactions from power users may dominate that of all other users, but these are also the least informative item pairs :'( as power users may have less specific item preferences

- Hence it is advisable to discard power users beyond a particular percentile to improve positive sampling

- Negative Sampling. Much of the research literature focuses on this issue. The reason is that as research data (e.g.

Movielens) is fixed, the positive pairs are usually pre-determined in the data. But the negative samples are usually not pre-specified in the data, so methods are devised to sample:- Random sampling. This is the simplest and most common approach: for a given anchor item, we sample negatives uniformly from the item catalogue, omitting the positive item(s) for the anchor

- Impressed but not positive. This is also a simple approach if we have access to item impression data. The impressed items are often hard negatives because they were deemed to be relevant by the existing recommendation system and surfaced to the user.

- The potential danger is that the model may only learn to distinguish negatives in a specific retrieval setting and is not robust to changes in the retrieval model

- Hard negative mining. Various other methods exist to mine for hard negatives, e.g. using BM25 score or embedding similarity. We discuss two ideas on this below in a bit more detail.

- Usually, it is best to mix negatives from all of the above so that the model learns to distinguish positives from a wide range of negatives

- Loss. Again, much ink has been spilt on this topic, but there are really only two main losses that we need to know:

- Triplet loss.

- Cross entropy (or InfoNCE) loss.

WORK IN PROGRESS

To improve training, two ideas are critical:

- Semi-hard Negative Mining: Originating from FaceNET, this technique addresses the problem of "false negatives" (items that are incorrectly labeled as negative) which can confuse the model. It focuses on training examples that are in a "goldilocks zone"—not too easy and not too hard. Lee (2020) demonstrated that mining for the hardest semi-hard negatives within a mini-batch is a computationally cheap and critical step for model performance.

- Smart Negative Sampling: Since random sampling often fails to find challenging negative examples, methods are devised to mine for them. This can involve using impression data, surrogate algorithms like BM25, or approximate-nearest-neighbor search. Lee (2020) also introduced using hierarchical clustering on a relational graph to find negatives from nearby clusters, which can be combined with semi-hard negative mining to prevent issues with false negatives.

From Matrix Factorization to Sequential Recommendations

Traditional matrix factorization methods learn a single, static user representation to predict their entire interaction history. This is problematic for users with diverse or evolving interests, as the model learns a "diluted" representation. While time-decayed ratings can help by giving more weight to recent interactions, the model still fails to learn the sequential nature of those interactions fully.

Sequential recommenders address this by mirroring the actual recommendation task: given a user's history up to time t, predict their next action at time t+1. The user's representation is therefore dynamic and based on their recent actions. There are three main approaches to this:

- Markov Approach: A simple but often effective method that uses the last interacted item as the primary feature to predict the next one.

- Pointwise Approach: This method involves "rolling back" features to what was available at the time of each action in the training data. While this explicitly computes every user-item interaction, it requires significant data-loading effort and careful feature engineering. Google chose this approach for its simplicity in distributed model training.

- Sequential Modelling Approach: This is analogous to language modeling, where the model learns to predict the next item in a sequence, learning from many items simultaneously.

Case Studies in Sequential Recommendations

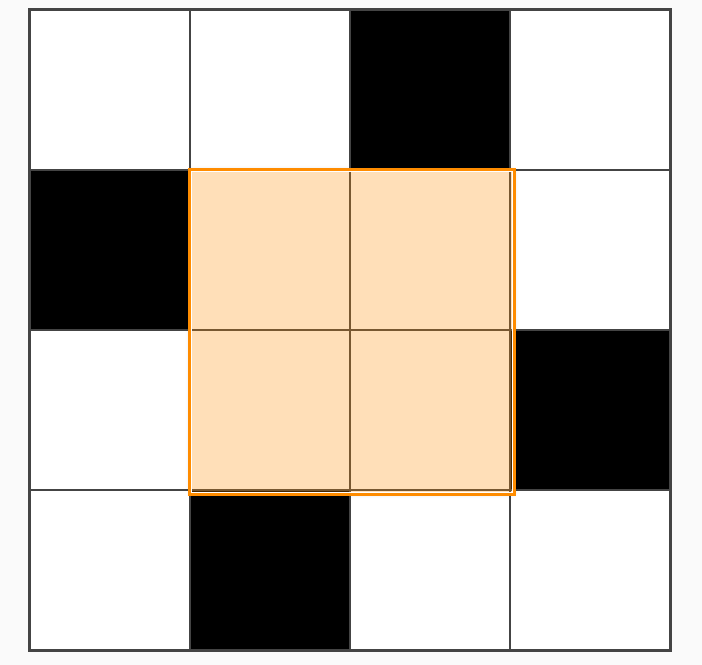

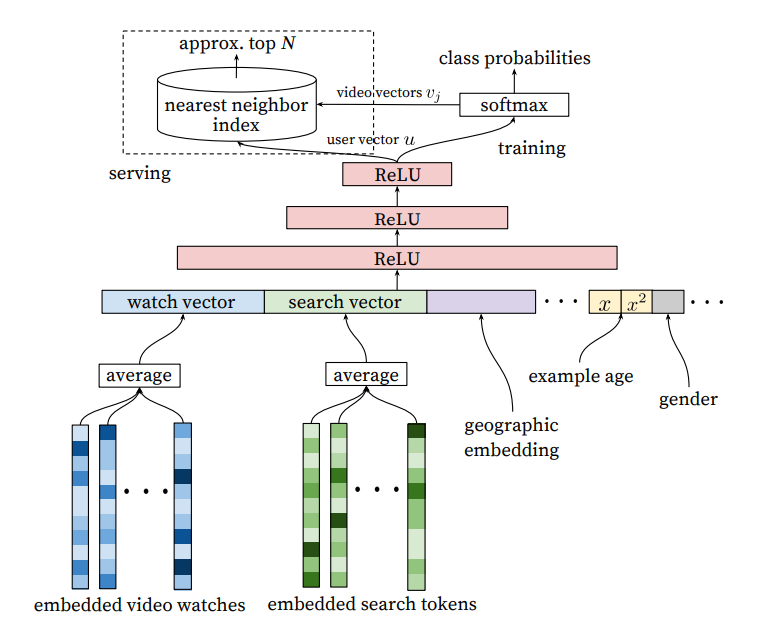

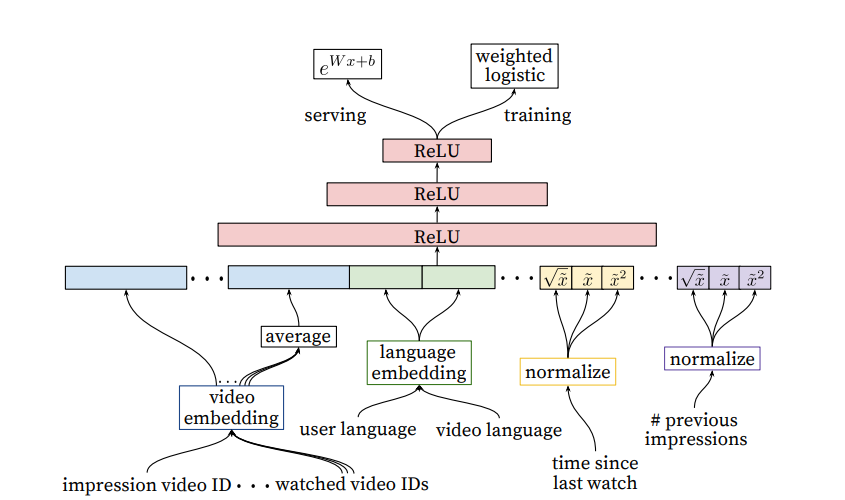

Covington (2016) - Pointwise Approach at YouTube:

YouTube's influential 2016 paper detailed a pointwise approach for both retrieval and ranking.

- Retrieval: The model used features like the average embedding of the last 50 video watches and search queries, along with user features like gender. A small MLP was trained with a sampled softmax loss to predict the next video watch. A crucial finding was that predicting the next watch was far more effective in online A/B tests than predicting a randomly held-out watch from the user's history.

[Diagram: Pointwise Approach for Retrieval - Covington 2016 architecture from slide 13]

- "Example Age" Feature: To capture the time-sensitive nature of video popularity, an "Example Age" feature was introduced. This simple feature encodes when an action took place, allowing the model to learn the "popularity lifecycle" of items, where popularity often spikes shortly after upload and then fades.

[Diagram: Chart showing Class Probability vs. Days Since Upload with and without the "Example Age" feature, from slide 15]

- Ranking: The ranking model was similar to the retrieval model but incorporated many more features, particularly those related to user-item historical interactions (e.g., "how many videos from this channel has the user watched?"). The paper noted that even simple transformations of features, like square roots, improved performance, highlighting the reality of manual feature engineering.

[Diagram: Pointwise Approach for Ranking - Covington 2016 architecture from slide 16]

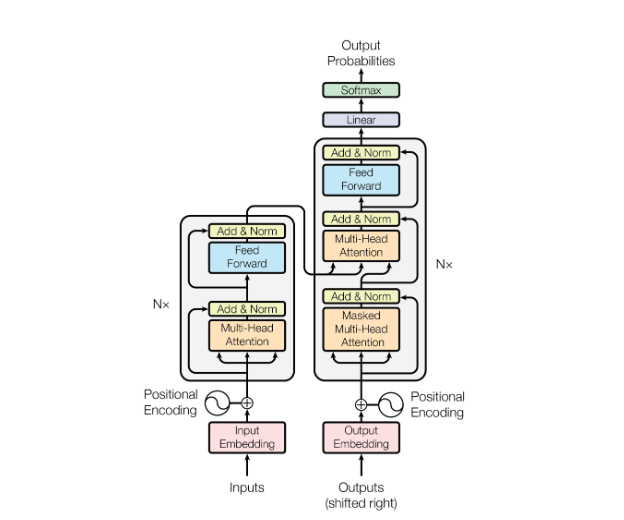

SASRec (2018) - The Transformer-Based Approach:

SASRec (Self-Attentive Sequential Recommendation) applied the transformer architecture to recommendations, treating a user's interaction history as a sequence of items to be predicted, much like words in a sentence.

- Architecture: Using causal masking, the transformer embedding at each time step encodes all previous interactions. The training loss is typically a binary cross-entropy loss comparing the dot product of the model's prediction with the target item embedding against a random negative sample. This approach significantly outperforms traditional matrix factorization methods.

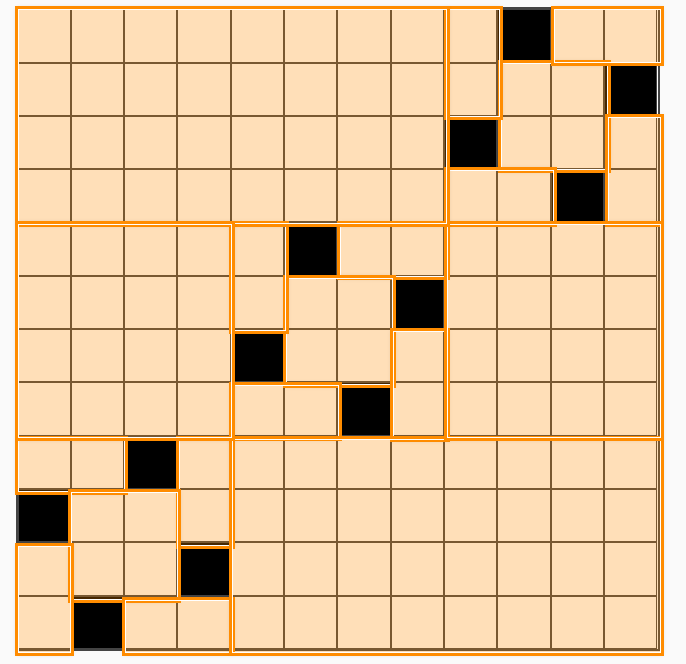

- Position Embeddings: Since transformers have no inherent notion of sequence, SASRec adds a learned position embedding to each item in the input sequence. This helps the model weigh recent items more heavily, as shown by visualizations of the attention matrix.

- Efficiency and Power: This paradigm is more efficient than the pointwise approach because it learns from N time steps simultaneously for each user. It also reduces the need for manual feature engineering, as the model can implicitly learn user-item interaction features.

[Diagram: Visualization of Average Attention Matrix from SASRec, with and without Positional Embeddings, from slide 21]

A subsequent paper, BERT4Rec (2019), proposed a "masked token prediction" task similar to BERT, allowing the model to use future items as input. While it initially appeared superior, Klenitskiy (2023) showed this was due to a difference in loss functions; when both use the same sampled softmax loss, SASRec consistently performs better and trains faster. The conclusion is that SASRec with sampled softmax loss is the current industry standard.

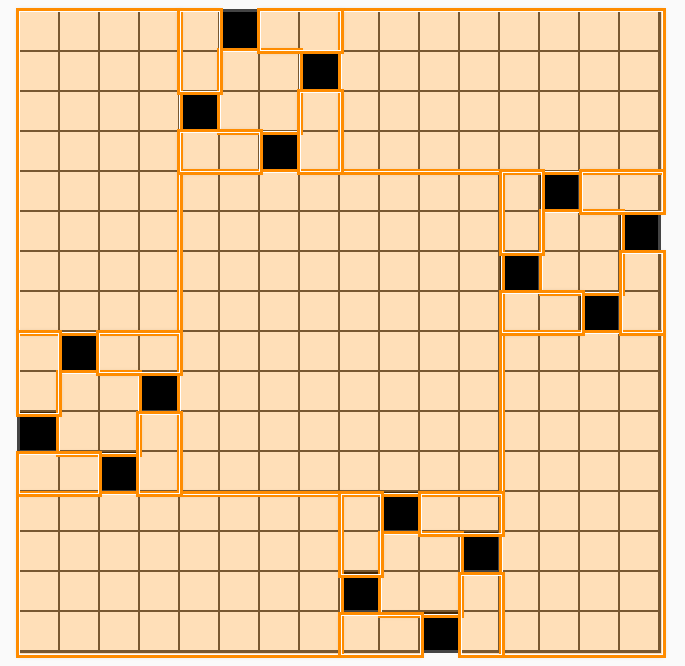

PinnerFormer (2022) - Long-Term Sequential Modeling at Pinterest:

PinnerFormer evolved from the need to switch from real-time user embedding computation to less costly daily batch jobs.

- Long-Term Loss: The key idea is that at each time step, instead of only predicting the very next item (like SASRec), the model predicts a random item from the user's future interactions over a longer window (e.g., 28 days). This creates more stable embeddings and surprisingly, PinnerFormer beats SASRec even when retrained in real-time.

- Advanced Features: PinnerFormer uses pre-computed PinSage (graph-based) embeddings as a base. It distinguishes between different action types (e.g., "Pin Save" vs. "Long Click") by concatenating a learned "action" embedding to the item embedding. It also heavily relies on time features, using Time2Vec to encode timestamps, noting a significant performance drop without them.

- Training Details: The model uses a combination of in-batch and random negatives with Log-Q correction, and ensures each user in a mini-batch is weighted equally to avoid bias from users with very long histories.

[Diagram: Illustration of PinnerFormer's "Long Term Loss" compared to SASRec from slide 25]

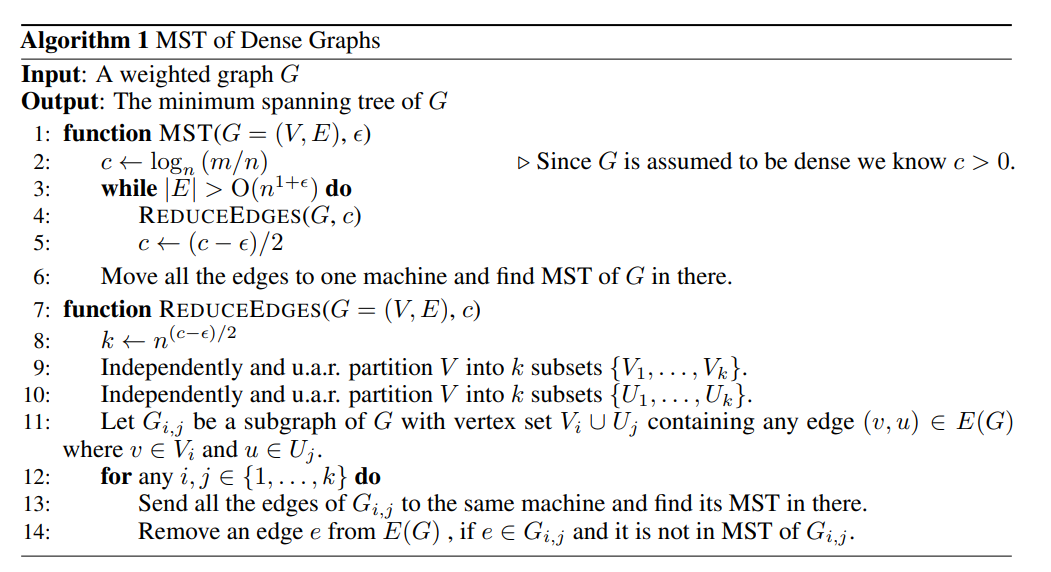

Multi-Task Learning in Recommendations

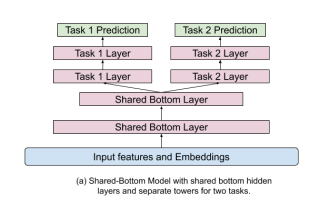

Recommendation systems often need to optimize for multiple objectives (e.g., clicks, saves, purchases). Several architectures address this, primarily for the pointwise approach:

- ESMM (2018): This model explicitly encodes the relationship that a conversion can only happen after a click (

P(convert) = P(click) * P(convert|click)). This allows the conversion model to benefit from the abundant click data while learning from the sparse conversion data.

[Diagram: ESMM architecture from slide 29]

- Shared-Bottom Architecture: A common approach where bottom layers of a neural network are shared across tasks, with separate "towers" or heads for each specific task prediction. The final recommendation is a manually tuned weighted average of the logits from each head.

[Diagram: Shared-Bottom Model architecture from slide 30]

- Mixture of Experts (MoE): An improvement over the shared-bottom model, MoE uses specialized "expert" layers. For each task, a gating network learns a weighted average over the outputs of these experts, allowing the model to modularize and handle potentially conflicting tasks more effectively.

[Diagram: Multi-gate Mixture-of-Expert (MoE) model architecture from slide 31]

- PinnerFormer's Approach: For sequential models, PinnerFormer took a simpler route, finding that treating all positive actions (repins, clicks, etc.) as equal signals was the best all-around strategy for their use case.

| Training Objective | 10s Closeup | 10s Click | Repin | All |

|---|---|---|---|---|

| 10s Closeup | 0.27 | 0.02 | 0.09 | 0.17 |

| 10s Click | 0.01 | 0.49 | 0.01 | 0.12 |

| Repin | 0.15 | 0.03 | 0.17 | 0.13 |

| Multi-task | 0.23 | 0.28 | 0.13 | 0.23 |

II. Trends in Search Systems

While related to recommendations, search systems have distinct challenges and characteristics in practice.

Search vs. Recommendation

- Relevance is Stricter: In search, there are objectively correct and incorrect results. Irrelevant results must not be surfaced, as they erode user trust.

- Latency is Critical: Users expect instant search results, making pre-computation of recommendations for a given query impossible.

- LLMs Have a Larger Impact: Search benefits more directly from advances in LLMs due to its text-centric nature and the overlap with fields like Information Retrieval and Question-Answering.

Search tasks exist on a spectrum from pure relevance (like academic Q&A) to pure personalization (which is essentially recommendation). E-commerce search lies in the middle, where relevance is key, but personalization plays a major role in user satisfaction given the large number of potentially relevant items for a broad query.

Academic vs. Industry Search

Academic research often focuses on Question-Answering (Q&A) datasets like MSMarco or TriviaQA, where queries are well-formed questions with a single correct answer. In this setting, pre-trained LLMs perform exceptionally well, and it is easier to beat traditional baselines like BM25.

Standard Academic Models:

- Ranking (Cross-Encoders): The standard is a cross-encoder architecture (often called MonoBERT), where a pre-trained language model like BERT takes a

[Query, Document]pair as input and outputs a relevance score. Later papers showed that using a sampled softmax loss with many random negatives is more effective than the original binary cross-entropy loss. As models scale, so does performance, with RankLlama showing stronger results than older BERT or T5-based rankers. A 2023 paper by Sun even showed that zero-shot prompting with ChatGPT, using a "permutation generation" method and sliding windows, can achieve state-of-the-art performance on Q&A tasks without any fine-tuning.

[Diagram: Standard Cross Encoder / MonoBERT architecture from slide 39]

| Ranking Model | MRR@10 for MSMarco |

|---|---|

| monoBERT | 37.2 |

| RankT5 | 43.4 |

| LLaMA2-8b | 44.9 |

- Retrieval (Two-Tower Models): The standard is Dense Passage Retrieval (DPR), which uses two separate encoders—one for the query and one for the document (passage). The model is trained with a sampled softmax loss to make the cosine similarity between a query and its positive document high, relative to negative documents. These negatives often include "in-batch" negatives (other positive documents in the same training batch) and "hard" negatives mined using BM25. To improve these retrievers, a common technique is distillation, where a powerful but slow cross-encoder (the "teacher") is used to train a faster two-tower model (the "student").

| Retrieval Model | MRR@10 for MSMarco |

|---|---|

| BM25 | 18.4 |

| ANCE (BERT-based) | 33.0 |

| LLaMA2-8b | 41.2 |

[Diagram: Illustration of a Mini Batch for Dense Passage Retrieval from slide 42]

Complexities of Industry Search Systems

Industry search systems are significantly more complex, needing to balance relevance, latency, and personalization.

Case Study: Pinterest Search (2024)

- Teacher-Student Distillation: Pinterest fine-tunes a large teacher LLM (Llama-3-8B) on 300k human-labeled query-pin pairs. To improve the teacher's performance, the text representation for each pin is enriched with its title, description, AI-generated image captions, high-engagement historical queries, and common board titles it's saved to.

| Model | Accuracy | AUROC 3+/4+/5+ |

|---|---|---|

| SearchSAGE | 0.503 | 0.878/0.845/0.826 |

| mBERT_base | 0.535 | 0.887/0.864/0.861 |

| T5_base | 0.569 | 0.909/0.884/0.886 |

| mDeBERTaV3_base | 0.580 | 0.917/0.892/0.895 |

| XLM-ROBERTalarge | 0.588 | 0.919/0.897/0.900 |

| Llama-3-8B | 0.602 | 0.930/0.904/0.908 |

- Scaling Up with Distilled Data: This powerful teacher model then generates labels for 30 million un-labeled data points. A small, fast student MLP model is then trained on this massive distilled dataset. This student model recovers up to 91% of the teacher's accuracy and, because the teacher was multilingual, the student model also learns to handle multiple languages effectively despite being trained only on English human-annotated data.

| Training Data | Accuracy | AUROC 3+/4+/5+ |

|---|---|---|

| 0.3M human labels | 0.484 | 0.850/0.817/0.794 |

| 6M distilled labels | 0.535 | 0.897/0.850/0.841 |

| 12M distilled labels | 0.539 | 0.903/0.856/0.847 |

| 30M distilled labels | 0.548 | 0.908/0.860/0.850 |

[Diagram: Teacher-Student distillation process at Pinterest from slide 47]

Case Study: Baidu Search (2021, 2023)

- Bootstrapping Labels with Weak Signals: Instead of training a large teacher model, Baidu uses a simple decision tree model trained on "weak relevance signals" (e.g., click-through rates, long-click rates, skip rates) to predict a "calibrated relevance score" for unlabeled data. Training a cross-encoder on this calibrated score was shown to be far more effective than training on raw clicks alone.

[Diagram: Baidu's decision tree model over weak relevance signals from slide 48]

- Query-Sensitive Summary: To avoid the latency of processing full documents, Baidu developed an algorithm to extract the most relevant sentences from a document based on word-match scores with the query. This summary is then used as input for the ranker, significantly boosting offline performance.

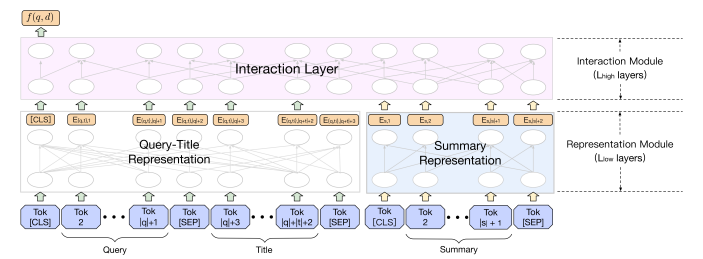

- Modular Attention for Speed: To accelerate their cross-encoder, Baidu uses a modular attention mechanism. Self-attention is first applied independently within the

[query-title]segment and thedocument summarysegment for several layers, before a few final layers of full cross-attention are applied. This structure reduces computation and increases inference speed by 30%.

[Diagram: Baidu's Modular Attention architecture from slide 50]

- From Relevance to Satisfaction: A 2023 paper from Baidu argued that relevance alone is insufficient and that models must optimize for user satisfaction. They engineered features for quality (e.g., number of ads), authority (e.g., PageRank), and recency, and even built a query analyzer to determine if a query was authority or recency-sensitive. These features, along with relevance, were used to train a model that boosted performance by 2.5% over a pure relevance model. Notably, numeric satisfaction features were simply normalized and appended directly into the text input for the LLM to process.

Case Study: Google DCNv2 (2020)

Google's Deep & Cross Network V2 (DCNv2) addresses a weakness in standard MLP rankers: they are not ideal for explicitly modeling feature interactions (e.g., user_is_interested_in_topic_X AND item_is_about_topic_X). DCNv2 introduces explicit "cross layers" that are designed to generate these feature crosses efficiently before feeding them into a standard deep network. This architecture provides performance gains over a standard MLP with the same number of parameters.

[Diagram: Google DCNv2 architecture with Cross Layers from slide 53]

Case Studies: Embedding-Based Retrieval (EBR) at Facebook and Taobao

EBR systems are used for semantic search but can struggle with relevance compared to traditional keyword-based lexical matching.

- Facebook's Hybrid Approach: Facebook integrates nearest-neighbor search directly into its in-house lexical search engine. A query can contain both standard lexical terms (e.g.,

location:seattle) and annnoperator that finds items within a certain embedding-distance radius. This ensures documents fulfill both lexical and semantic requirements. Facebook's query and document encoder towers use a mix of term-based (char-3-grams) and LLM-based representations. - Taobao's Relevance Filters: Taobao found their EBR system sometimes returned irrelevant but semantically close items (e.g., a search for "Nike shoes" returning "Adidas shoes"). Their solution was to apply explicit lexical boolean filters on top of the ANN search (e.g.,

ANN search + Brand:Nike + Product:Shoes). While this sacrifices some of the "fuzzy" semantic search capability, it guarantees relevance. Taobao also found that optimizing for engagement (clicks) does not guarantee relevance, and ultimately chose to accept slightly lower engagement to ensure a higher percentage of relevant results. Their query representation is enhanced by using cross-attention between the query and the user's historical interactions to personalize the query embedding.

| Experiment | Engagement % | Relevance % |

|---|---|---|

| Baseline | 85.6% | 71.2% |

| Baseline + personalization | 86.4% | 71.4% |

| Baseline + Lower temperature | 85.5% | 79.0% |

| Baseline + all | 84.7% | 80.0% |

LightGBM Memory

TLDR: Solutions for memory issues during training of a LightGBM model:

- Cast numeric values into

np.float32to save data space - Keep

num_leaves <= 100(or some reasonable number) - If feature dimension is large (e.g.

M >= 1000), trycolsample_bytree = 0.1, although this might not help too much if the bottleneck is during bin histogram construction (rather than the actual training) - If number of rows and features are both large (e.g.

N >= 1_000_000andM >= 1000, i.e.>= 4 GB) then the data itself is taking up a lot of memory. It would be worthwhile to put the data on disk and uselgb.Datasetby providing the file path as the data argument instead. Then, we should settwo_round=Truefor the train method params. The explanation for two round is rather unclear, but it should help with memory whenDatasetis loading from disk (rather than from anumpy.arrayin memory). For this option, I had some trouble getting it to work with categorical columns.

For more details can refer to the experiments below.

Experiments

I often run into memory issues running LightGBM. So here are some experiments to measure memory usage and understand how hyperparameters can affect memory usage.

The function of interest is the fit method for the learn to rank task.

import lightGBM as lgb

def f():

model = lgb.LGBMRanker(**params, objective="lambdarank")

model.fit(

X=data,

y=y,

group=groups,

)

The memory usage is measured using the memory_profiler module, which checks the memory usage at .1 second intervals. The maximum is then taken to represent the maximum memory usage of the fit function. We also take note of the size of the data itself (using data.nbytes) and subtract that away to get closer to the LightGBM memory usage. Do note that this memory profiling is not very rigorous, so the results are best for relative comparison within each experiment rather than across experiments.

from memory_profiler import memory_usage

def run(params):

mem_usage = memory_usage(f)

return max(mem_usage) / 1000 # GB

We set the default parameters as follows and generate the data this way. For the experiments below, the default parameters are used unless specified otherwise.

DEFAULT_PARAMS = {

"N": 200000, # number of instances

"M": 500, # feature dimension

"n_estimators": 100,

"num_leaves": 100,

"histogram_pool_size": -1,

}

data = np.random.randn(DEFAULT_PARAMS["N"], DEFAULT_PARAMS["M"])

groups = [20] * int(N / 20) # assume each session has 20 rows

y = np.random.randint(2, size=N) # randomly choose 0 or 1

Large num_leaves can get very memory intensive. We should not need too many leaves, so generally using num_leaves <= 100 and increasing the number of estimators seems sensible to Gme.

- num_leaves:

10, Maximum memory usage: 2.28 GB - 0.80 GB =1.48 GB - num_leaves:

100, Maximum memory usage: 2.52 GB - 0.80 GB =1.72 GB - num_leaves:

1000, Maximum memory usage: 4.04 GB - 0.80 GB =3.24 GB

Increasing n_estimators doesn't seem to raise memory much, but increases run time because each tree is fitted sequentially on the residual errors, so it cannot be parallelized.

- n_estimators:

10, Maximum memory usage: 2.28 GB - 0.80 GB =1.48 GB - n_estimators:

100, Maximum memory usage: 2.53 GB - 0.80 GB =1.73 GB - n_estimators:

1000, Maximum memory usage: 2.69 GB - 0.80 GB =1.89 GB

Increasing N increases memory sublinearly. It seems that the data size itself will be more of a problem than the increase in LightGBM memory usage as N increases. For extremely large N, we can also set the subsample parameter to use only a fraction of the training instances for each step (i.e. stochastic rather than full gradient descent). By default subsample=1.0.

- N:

1,000, Maximum memory usage: 0.38 GB - 0.00 GB =0.38 GB - N:

10,000, Maximum memory usage: 0.45 GB - 0.04 GB =0.41 GB - N:

100,000, Maximum memory usage: 1.46 GB - 0.40 GB =1.06 GB - N:

1,000,000, Maximum memory usage: 6.12 GB - 4.00 GB =2.12 GB - N:

2,000,000, Maximum memory usage: 10.48 GB - 8.00 GB =2.48 GB

In contrast to N, memory usage is quite sensitive to M, seems to increase linearly when M gets large. M=10,000 blows up my memory. I suppose this could be mitigated by setting colsample_bytree or colsample_bynode to sample a smaller subset.

- M:

100, Maximum memory usage: 2.08 GB - 0.16 GB =1.92 GB - M:

1000, Maximum memory usage: 4.92 GB - 1.60 GB =3.32 GB - M:

2000, Maximum memory usage: 9.69 GB - 3.20 GB =6.49 GB - M:

3000, Maximum memory usage: 14.35 GB - 4.80 GB =9.55 GB

To deal with the high memory usage of large M, we can set colsample_bytree which samples a subset of columns before training each tree. This will help to mitigate the memory usage. For this experiment, we set M=2000 to simulate data with high number of dimensions.

colsample_bytree: 0.1, Maximum memory usage: 8.60 GB - 3.20 GB =5.40 GBcolsample_bytree: 0.2, Maximum memory usage: 9.58 GB - 3.20 GB =6.38 GBcolsample_bytree: 0.4, Maximum memory usage: 10.06 GB - 3.20 GB =6.86 GBcolsample_bytree: 0.6, Maximum memory usage: 10.07 GB - 3.20 GB =6.87 GBcolsample_bytree: 0.8, Maximum memory usage: 10.46 GB - 3.20 GB =7.26 GB

In contrast, setting colsample_bynode does not help memory usage at all. Not too sure why, but I suppose since multiple nodes for the same tree can be split at the same time, the full feature set still has to be kept in memory.

colsample_bynode: 0.1, Maximum memory usage: 10.49 GB - 3.20 GB =7.29 GBcolsample_bynode: 0.2, Maximum memory usage: 10.49 GB - 3.20 GB =7.29 GBcolsample_bynode: 0.4, Maximum memory usage: 10.49 GB - 3.20 GB =7.29 GBcolsample_bynode: 0.6, Maximum memory usage: 10.49 GB - 3.20 GB =7.29 GBcolsample_bynode: 0.8, Maximum memory usage: 10.48 GB - 3.20 GB =7.28 GB

Tweaking boosting and data_sample_strategy don't seem to affect memory usage too much. Using dart seems to require a bit more memory than the traditional gbdt.

data_sample_strategy: bagging,boosting: gbdt, Maximum memory usage: 8.90 GB - 3.20 GB =5.70 GBdata_sample_strategy: goss,boosting: gbdt, Maximum memory usage: 9.58 GB - 3.20 GB =6.38 GBdata_sample_strategy: bagging,boosting: dart, Maximum memory usage: 9.81 GB - 3.20 GB =6.61 GBdata_sample_strategy: goss,boosting: dart, Maximum memory usage: 9.80 GB - 3.20 GB =6.60 GB

Another bottleneck we can tackle is to realize that LightGBM is a two-stage algorithm. In the first stage, LightGBM uses the full dataset to construct bins for each numeric variable (controlled by the max_bins argument) based on the optimal splits. In the second stage, these discretized bins are then used to map and split the numeric variables during the actual training process to contruct trees. From my understanding, the first stage cannot be chunked as it requires the full dataset, but the second stage can be chunked (as per any stochastic gradient descent algorithm) where a fraction of the dataset is loaded at each time. Hence, the real bottleneck appears to be the first stage, when the bins are constructed.

According to this thread, we can separate the memory usage between the two stages by using lgb.Dataset. First, we initialize the Dataset object and make sure to set free_raw_data=True (this tells it to free the original data array after the binning is done). Then, we trigger the actual dataset construction using dataset.construct(). Thereafter, we are free to delete the original data array to free up memory for the actual training. The following code illustrates this concept.

dataset = lgb.Dataset(data=data, label=y, group=groups, free_raw_data=True)

del data

dataset.construct()

lgb.train(params=params, train_set=dataset)

TF-IDF

Term Frequency - Inverse Document Frequency is a well known method for representing a document as a bag of words. For a given corpus , we compute the IDF value for each word by taking , with denoting the number of documents in containing the word . The document is represented by a vector of length corresponding to the number of unique words in . Each element of the vector will be a tf-idf value for the word, i.e. , where represents the term frequency of the word in document . Sometimes, we may l1 or l2 normalize the tf-idf vector so that the dot product between document vectors represents the cosine similarity between them.

Bayesian Smoothing

We may want to apply some bayesian smoothing to the terms to avoid spurious matches. For example, suppose that a rare word appears only in documents and in the entire corpus just by random chance. The will be a large value, and hence documents and will have a high cosine similarity just because of this rare word.

For the specific setting I am considering, we can deal with this problem using bayesian smoothing. The setting is as follows:

- Each document represents a job, and each job is tagged to an occupation

- An occupation can have one or more jobs tagged to it

- We wish to represent each occupation as a TF-IDF vector of words

To apply bayesian smoothing to this scenario, notice that we only need to smooth the term frequencies . Since the IDF values are estimated across the whole corpus, we can assume that those are relatively reliable. And since term frequencies are counts, we can use a poisson random variable to represent them. See reference for a primer on the gamma-poisson bayesian inference model.

Specifically, we assume that is the poisson parameter that dictates the term frequency of in any document belonging to , i.e. . We treat the observed term frequency for each document belonging to as a data sample to update our beliefs about . We start with an uninformative gamma prior for , and obtain the MAP estimate for as below, with denoting the number of documents that belong to occupation .

We can thus use this formula to obtain posterior estimates for each . One possible choice of the prior parameters and is to set to be the mean term frequency for word per document in the entire corpus, and to set . This prior corresponds to following a gamma distribution with mean and variance , which seems to be a reasonable choice that can be overrided by a reasonable amount of data.

The posterior variance, which may also be helpful in quantifying the confidence of this estimate, is:

Finally, after obtaining the posterior estimates for each , we can just use them as our term frequencies and multiply them by the IDF values as per normal. We can also apply l1 or l2 normalization thereafter to the tf-idf vectors. This method should produce tf-idf vectors that are more robust to the original problem of spurious matches.

For illustration, for a very rare word , will be a low value close to 0 (say 0.01). Suppose we were to observe number of new documents, each containing one occurrence of word . Then the posterior estimate of will update as follows:

| n | |

|---|---|

| 1 | 0.005 |

| 2 | 0.337 |

| 3 | 0.503 |

| 4 | 0.602 |

| 20 | 0.905 |

As desired, the estimate for starts off at a very small value and gradually approaches the true value . This will help mitigate the effect of spurious matches. If we desire for the update to match the data more quickly, we can simply scale and down by some factor, e.g. now and . Then we have:

| n | |

|---|---|

| 1 | 0.001 |

| 2 | 0.455 |

| 3 | 0.626 |

| 4 | 0.715 |

| 20 | 0.941 |

As a final detail, note that the update formula can result in negative estimates if and . The small negative value is probably not a big problem for our purposes, but we could also resolve it by setting the negative values to zero if desired.

Cross Encoders

Cross encoder is a type of model architecture used for re-ranking a relatively small set of candidates (typically 1,000 or less) with great precision. In the Question-Answering or machine reading literature, typically the task involves finding the top matching documents to a given query. A typical task is the MS MARCO dataset, which seeks to find the top documents that are relevant to a given bing query.

Basic Setup

Typically, the base model is some kind of pre-trained BERT model, and a classification head is added on top to output a probability. Each (query, document) pair is concatenated with [SEP] token in-between to form a sentence. The sentence is fed into the classification model to output a probability. The model is trained using binary cross-entropy loss against 0,1 labels (irrelevant or relevant).

This is the setup used by <Nogeuira 2019>, possibly the first paper to propose the cross encoder. Some specifics for their setup:

- The query is truncated to max

64 tokens, while the passage is truncated such that the concatenated sentence is max512 tokens. They use the[CLS]embedding as input to a classifier head. - The loss for a single query is formulated as below. refers to the score from the classifier model, refers to the documents that are relevant, and refers to documents in the top

1,000retrieved by BM25 that are not relevant. Note that this results in a very imbalanced dataset.

- The model is fine-tuned with a batch size of 128 sentence pairs for 100k batches.

As opposed to bi-encoders (or dual encoders), which take a dot product between the query embedding and the document embedding, we cannot pre-compute embeddings in the cross encoder setting, because the cross encoder requires a forward pass on the concatenated (query, document) pair. Due to the bi-directional attention on the full concatenated sentence, we need the full sentence before we can compute the score, which requires the query that we only see at inference time. Hence, the cross encoder is limited to reranking a small set of candidates as it requires a full forward pass on each query, candidate_document pair separately.

Contrastive Loss

The vanilla binary cross entropy loss proposed above may be thought of as a

Thus <Gao 2021> proposes the Local Contrastive Estimation loss. For a given query q, a positive document is selected, and a few negative documents are sampled using a retriever (e.g. BM25). The contrastive loss then seeks to maximize the softmax probability of the positive document against the negative documents.

It is confirmed in multiple experiments in Gao 2021 and Pradeep 2022 that LCE consistently out-performs point-wise cross entropy loss. Furthermore, the performance consistently improves as the number of negative documents per query (i.e. ) increases. In Gao 2021, up to 7 negatives (i.e. batch size of 8) were used. Pradeep 2022 shows that increasing the batch size up to 32 continues to yield gains consistently (albeit diminishingly).

Other details

Pradeep 2022's experiments show that using a stronger retrieval model (a ColBERT-based model) during inference generates slight gains in final performance (as opposed to BM25). Although Gao 2021 argues that it is also important to use the same retrieval model during model training (so that the cross encoder sees the same distribution of negatives during training and inference), Pradeep 2022 argues that the alignment is not as important as the stronger retrieval performance during inference.

References

- Nogueira 2019 - Passage Re-ranking with BERT

- Gao 2021 - Rethink Training of BERT Rerankers in Multi-Stage Retrieval Pipeline

- Pradeep 2022 - A Bag of Tricks for Improving Cross Encoder Effectiveness

- Huggingface reranker training

SentenceTransformers

SentenceTransformers is a useful library for training various BERT-based models, including two-tower embedding models and cross encoder reranking models.

Cross Encoder

SentenceTransformers v4.0 updated their cross encoder training interface (see the v4.0 blogpost). Here we try to follow the key components for cross encoder training using their API.

The main class for training is CrossEncoderTrainer. We rely on a Huggingface datasets.Dataset class to provide training and validation data. CrossEncoderTrainer requires that the dataset format matches the chosen loss function.

The loss overview page provides a summary of cross encoder losses and the required dataset format. In general for cross encoder training, we have two sentences which are either positively or negatively related to each other. Which loss function we choose depends on the specific dataset format we possess.

BinaryCrossEntropyLoss

Use this loss if we have inputs in the form of (sentence_A, sentence_B) and a label of either 0: negative, 1: positive or a float score between 0-1. In the huggingface dataset, we would need to ensure that the label column is named label or score, and have two other input columns corresponding to sentence_A and sentence_B. For sentence_transformers package in general, order of columns matter, so we should set it to sentence_A, sentence_B, label.

Inspecting the source code would show that each sentence pair is tokenized and encoded by the cross encoder model. The cross encoder must output a single logit (i.e. initialized with num_labels=1). Thus we get a prediction vector of dim batch_size. The torch.nn.BCEWithLogitsLoss is then used to compute the binary cross entropy loss of the prediction logits against the actual labels , according to the standard bce loss:

This is a simple and effective loss. The user should ensure that the labels are well distributed (between and ) without any severe class imbalance.

CrossEntropyLoss

The CrossEntropyLoss is used for a classification task, where for a given input sentence pair (sentence_A, sentence_B), the label is a class. For example, we may have data where each sentence pair is tagged to a 1-5 rating scale. We need to instatiate the CrossEncoder class with num_labels=num_classes for this use case. This creates a prediction head for each class.

Looking at the source code, we see that this loss simply takes the prediction logits from the model (of dimension num_labels) and computes the torch.nn.CrossEntropyLoss against the actual labels.

Note that the cross entropy loss takes the following form. Given num_labels=C and logits of , where the correct label is index , we have:

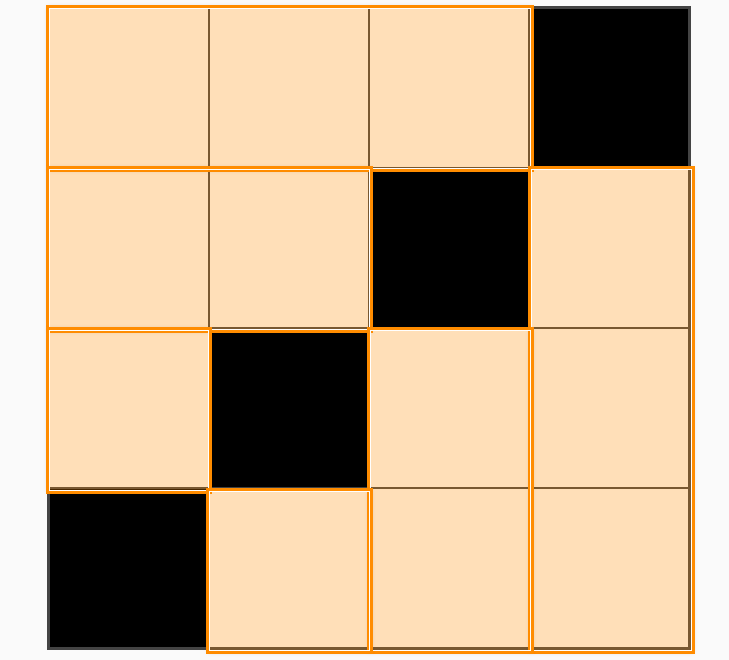

MultipleNegativesRankingLoss

This is basically InfoNCE loss or in-batch negatives loss. The inputs to this loss can take the following forms:

(anchor, positive)sentences(anchor, positive, negative)sentences(anchor, positive, negative_1, ..., negative_n)sentences

The documentation page has a nice description of what this loss does: Given an anchor, assign the highest similarity to the corresponding positive document out of every single positive and negative document in the batch.

Diving into the source code:

- The inputs are

list[list[str]], where the outer list corresponds to[anchor, positive, *negatives]. The inner list corresponds to the batch size. scoresof dimension(batch_size)are computed for each anchor, positive pairget_in_batch_negativesis then called to mine negatives for each anchor.- candidates (positive and negatives) are extracted at

inputs[1:]and flattened into a long list - A mask is created such that for each anchor, all the matching positive and negative candidates are masked out (not participating)

- The matching negatives do not participate because they will be added later on

- Amongst the remaining negatives,

torch.multinomialis used to selectself.num_negativesnumber of documents per anchor at random self.num_negativesdefaults to4- These randomly selected negative texts are then returned as

list[str]

- candidates (positive and negatives) are extracted at

- For each negative in num_negatives mined in-batch negatives:

scoreof dimension(batch_size)is computed for the anchor, negative pair- The result is appended to

scores

- Similarly, for each hard matching negative:

scoreof dimension(batch_size)is computed for the anchor, hard negative pair- The result is appended to

scores

Now scores is passed into calculate_loss:

- Recall that

scoresis a list of tensors where the outer list is size1 + num_rand_negatives + num_hard_negatives, and each tensor is of dimensionbatch_size - Thus

torch.cat+tranposeis called to make it(batch_size, 1 + num_rand_negatives + num_hard_negatives) - Note that for each row, the first column corresponds to the positive document

- Hence the

labelsmay be created astorch.zeros(batch_size) - Then

torch.nn.CrossEntropyLoss()(scores, labels)may be called to get the loss

This sums up the loss computation for MultipleNegativesRankingLoss.

CachedMultipleNegativesRankingLoss

Collaborative Filtering

Collaborative filtering is typically done with implicit feedback in the RecSys setting. In this setting, interactions are often very sparse. Most of the time, only positive signals are recorded, but a non-interaction could either mean (i) user dislikes the item or (ii) the user was not exposed to the item. Hence, we cannot use algorithms like SVD which assume no interactions as irrelevance.

A useful repository is https://github.com/recommenders-team/recommenders.

A generic and fairly common architecture for the collaborative filtering model is to embed each user and item into separate fixed size vectors, and use the cosine similarity between the vectors to represent a score. This score is fed into a cross entropy loss against the labelled relevance of user to item to train the embeddings.

Setup

Let and denote the dimensional embedding vector for user and item . Let the similarity function be which is typically , and distance function which is typically . Then some common loss functions may be denoted as below.

Pointwise Losses are typically low-performing. For a given (u, i) pair, pointwise losses assume the presence of a 0, 1 label for relevance, and tries to predict it. The typical pointwise loss is Binary Cross Entropy, which may be expressed as:

Pairwise Losses assume the presence of training triplets (u, i, j) which correspond to user, positive item and negative item. A typical pairwise loss is Bayesian Personalized Ranking, as follows:

Weighted Matrix Factorization

This describes the Cornac implementation of WMF. The code:

Let describe a rating matrix of users and items. For simplicity, we may restrict . Given a user embedding matrix and item embedding matrix , WMF computes the similarity score as the dot product .

The general loss function is:

The idea is to simply take the squared error from the true ratings matrix as our loss, but apply a lower weightage to elements in the rating matrix where the rating is zero (as these are usually unobserved / implicit negatives that we are less confident about). Usually b is set to 0.01. Regularization is performed on the user and item embedding matrices, with as hyperparameters to adjust the strength of regularization.

For cornac, this loss is adapted to the mini batch setting. Specifically, the algorithm is:

- Draw a mini batch (default:

B = 128) of items but use all the users - Compute the model predictions

- Compute squared error

- Multiply matrix of weights (either

1for positive ratings orbfor negative ratings) element-wise with

Note that Adam optimizer is used, and gradients are clipped between [-5, 5].

Bilateral Variational Autoencoder (BiVAE)

Recommenders BiVAE Deep Dive BiVAE Paper

A working implementation of BiVAE is available on Cornac.

A variational autoencoder improves over traditional linear matrix factorization methods by using non-linearity and a probabilistic formulation. Given a user, the autoencoder encodes the data representing the entity into a vector in some latent space. A decoder then takes the vector in the latent space and decodes it into something close to the original data.

The difference between VAE and a regular autoencoder is that it doesn't learn a fixed vector representation, but rather a probability distribution in the latent space. This allows it to model noisy, sparse interaction data better.

Splitting

recommenders uses a few different types of data splitting:

- Stratified spltting.

Evaluation

Evaluation is a non-trivial topic for recsys, and different approaches measure different things. Suppose we have a dataset of user-item interactions with a timestamp.

Random splitting simply takes a random split of say 75% for train and 25% for test. The problems with this approach:

- No guarantee of overlap in users across train and test. If a user does not appear in the train set, it is not possible to recommend items for him/her in the test set.

- Chronological overlap between train and test set, leading to data leakage issues.

Stratified splitting addresses the user overlap issue by ensuring that the number of rows per user in the train and test set are approximately 75% and 25% of the number of rows in the original data respectively. This ensures that we have sufficient training and test data for each user, so that the collaborative filtering algorithm has a fair chance of recommending items for each user.

However, stratified splitting still involves randomly assigning rows for each user into the train and test set, which does not address the chronological overlap issue. Temporal stratified splitting addresses this issue by assigning the 75% and 25% of train and test data based on chronological order. In other words, the oldest 75% of data for each user is assigned to the train set.

The extreme version of temporal stratified splitting is leave last out splitting, in which all but the latest row for each user is put into the train set. This is suitable for settings where the task is to predict the very next action which the user will take (e.g. which song will the user listen to next).

Note that temporal stratified splitting may potentially introduce temporal overlap between the train and test sets across users. That is, the train set period for user A may potentially overlap with the test set period for user B. Hence, if there are strong concerns with temporal effects in the dataset, we may need to be mindful of this.

Global temporal splitting addresses this issue by assigning the oldest 75% of data across all users to the train set. This addresses the data leakage issue and more closely resembles actual production setting. However, there is no guarantee on the amount of train/test data for each user. Hence we may need to drop rows where there exists test data for user A but no corresponding train data due to the global temporal cutoff.

AB Testing

References

-

General:

-

On Bayesian AB Testing:

Examples

These summaries are based on reading PostHog's article on AB testing and studying the ones of interest further.

AB Testing at Airbnb

AB Testing is crucial because the outside world often has a larger effect on metrics than product changes. Factors like seasonality, economy can cause metrics to fluctuate greatly, hence a controlled experiment is necessary to control for external factors and isolate the effects of the product change.

Airbnb has a complex booking flow of search -> contact -> accept -> book. While they track the AB impact of each stage, the main metric of interest is the search to book metric.

One pitfall is stopping the AB Test too early. Airbnb noticed that their AB tests tend to follow a pattern of hitting significance early on but returning to neutral when the test has run its full course. This is a phenomenon known as peeking, which is to repeatedly examine an ongoing AB test. It makes it much more likely to find a significant effect when there isn't, since we are doing a statistical test each time we peek. For Airbnb, they hypothesize that this is also a phenomenon caused by the long lead time it takes from search -> book, such that early converters have a disproportionately large influence at the beginning of the experiment.

The natural solution to this problem is to conduct power analysis and determine the desired sample size prior to the experiment. However, Airbnb runs multiple AB tests at the same time, hence they required an automatic way to track ongoing AB tests and report when significance has been reached. Their solution back then was to create a dynamic p-value graph. The idea is that on day 1 of the experiment, we would require a very low p-value to declare success. As time goes on and more samples are collected, we can gradually increase the p-value until it hits 5%. The shape of this graph is unique to their platform and they did extensive simulations to create it, so this solution is not very generalizable.

Another pitfall was assuming that the system is working. After running an AB test for shifting from more words to more pictures, the initial result was neutral. However, they investigated and found that most browsers had a significant positive effect except for Internet Explorer. It turned out that the change had some breaking effect on older IE browsers. After fixing that, the overall result became positive. Hence some investigation is warranted when the AB test results are unintuitive. However, one needs to be cautious of multiple-testing when investigating breakdowns, since we are conducting multiple statistical tests.

Airbnb has a strong AB testing culture - only 8% of AB tests are successful (see Ron Kohavi's LinkedIn Post).

AB Testing at Monzo

Monzo has a big AB testing culture - they ran 21 experiments in 6 months. Monzo has a bottom-up AB testing culture where anyone can write a proposal on Notion. Some of the best ideas come from the customer operations staff working on the frontlines. A proposal comprises the following sections:

- What problem are you trying to solve?

- Why should we solve it?

- How should we solve it (optional)?

- What is the ideal way to solve this problem (optional)?

Many proposals end up becoming AB experiments. Monzo prefers launching pellets rather than cannonballs. This means that each experiment comprises small changes, is quick to build, and helps the team learn quickly.

AB Testing at Convoy

Convoy argues that bayesian AB testing is more efficient than frequentist AB testing and allows them to push out product changes faster while still controlling risk.

The argument against frequentist AB testing is as follows. Under traditional AB testing, we define a null hypothesis using the control group (call it A), and declare a treatment (call it B) as successful if the treatment value has a significant p-value, i.e. it falls outside of the range of reasonable values under the null. Based on power analysis and an expected effect size, we predetermine the necessary sample size to achieve sufficient power, and once this sample size is reached, we have a binary success or failure result based on the p-value.

Convoy argues that this approach is safe but inefficient. This is because prior to the sample size being reached, we do not have a principled way of saying anything about the effectiveness of the treatment, even if it is performing better. Furthermore, frequentist AB testing gives us a binary result, but it does not quantify the size of the difference. Specifically, an insignificant test where E(A)=10%, E(B)=11% is quite different from E(A)=15%, E(B)=10%. For the former case, one can argue for launching B even if the p-value did not hit significance, whereas for the latter we should definitely not launch.

Bayesian analysis comes in to make the above intuition concrete. Suppose we are interested in the clickthrough rate (CTR) of variant A vs B. Bayesian analysis provides a distribution of the average CTR for each variant A, B at any point of the AB test, based on the results that it has seen thus far. These posterior distributions reflect both the mean of the data (how far apart is from ) and the variance of the data (how spread out the distributions are), allowing us to quantify how much we stand to gain if we were to pick either variant A or B at this point in time.

Concretely, they define a loss function as follows. Let and be the unobserved true CTR for variants A and B respectively, and let the variable denote which variant we decide to choose. Then our loss for choosing each variant can be expressed as:

In other words, the loss above expresses how much we stand to lose by picking the unfortunately wrong variant based on incomplete evidence at this point in time. Of course, we do not know the true values of and , so we need to estimate the loss using our posterior distributions which we computed from data. We then compute the expected loss based on the posterior distributions , as such:

Here, is the joint posterior distribution, which I believe we can obtain by multiplying the two independent posterior distributions , together. We can also perform random draws from the posterior distributions to estimate this statistic. Finally, we make a decision by choosing the variant that dips below a certain loss threshold, which is usually a very small value.

The appeal of the bayesian approach is two-fold:

- It allows us to make faster decisions. Suppose an experiment is wildly successful, and it is clear within a day that variant B is better. Bayesian analysis will be able to reveal this result, whereas frequentist analysis will tell us to wait longer (since we estimated the effect size to be smaller).

- It allows us to control risk. Since we are making decisions based on minimizing risk (supposing we had picked the poorer variant), we may be sure that even if we are wrong, it will not severely degrade our product. So supposing that there is no significant engineering cost between variant A and B, we can more rapidly roll out new iterations with the assurance that on average our product will be improving.

Power Analysis

Reference: Probing into Minimum Sample Size by Mintao Wei

How to determine the minimum sample size required to achieve a certain significance level and power desired?

The following table helps us understand how type I and type II errors come into play:

| Null Hypothesis: A is True | Alternate Hypothesis: B is True | |

|---|---|---|

| Reject A | Type I Error | Good statistical power |

| Accept A | Good significance level | Type II Error |

Type I Error refers to rejecting the null hypothesis when it is actually true, e.g. when we think that an AA test has significant difference. In short, it means we were too eager to deploy a poor variant. This should happen with probability , which is the significance level which we set (typically 5%). We have a better handle on type I error because the baseline conversion rate is typically known prior to an experiment.

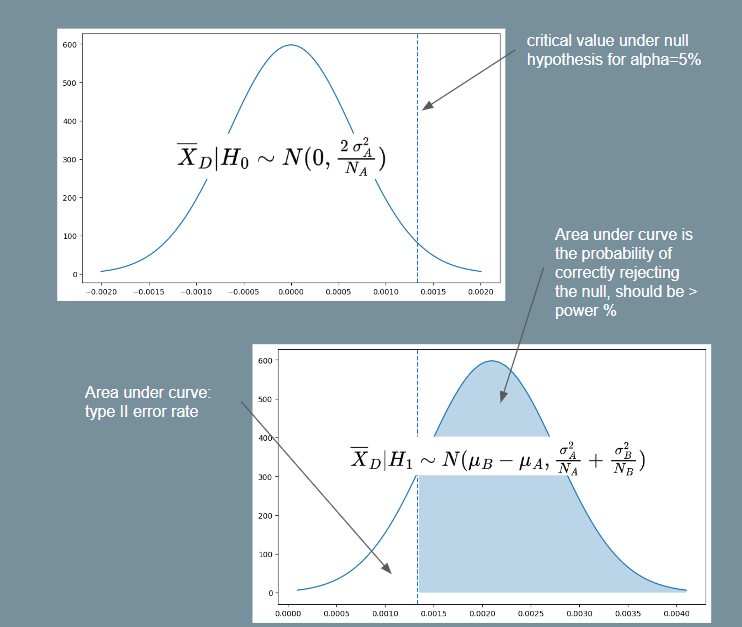

Type II Error refers to failing to reject the null hypothesis when the alternate is actually true, i.e. we failed to get a significant effect on an improvement that is known to be better. In short, we were too conservative and failed to deploy a winning variant. In order to reason about type II error, we need to make a guess on what is the distribution of test variant B. Typically, this is done by assuming a minimum effect we wish to detect, and setting , and re-using the standard deviation from A. With these assumptions in place, we use to determine the type II error that should only occur with probability (typically 20%). Note that since is the minimum effect we wish to detect, if the actual effect turned out to be larger, the type II error can only be smaller than our desired amount, which is ok.

Now we can derive the formula for the minimum sample size required to achieve the desired levels of type I and type II error respectively.

Let us define the baseline conversion rate as , and the minimum relative detectable effect rate as . Consequently, the minimum detectable delta is . Let the desired power level be , and the desired significance level as . Assume the scenario where we are running an AA or AB test with two variants of sample size each.

Firstly, we write down the distribution of the sample mean difference supposing we knew the true population means and standard deviations. Let and . Note that may have arbitrary distributions, e.g. they could measure proportions, revenue etc.

Under the central limit theorem, the sample means will be distributed like so with samples: , . Importantly, the difference of the sample means will have the distribution below. Note that we add the variances together because for any two independent random variables .

Now we can start working from the desired levels to the minimum sample size. We need to ensure that both objectives below are achieved with our sample size :

- Assuming null hypothesis to be true, ensure that type I error .

- Assuming alternate hypothesis to be true, ensure that type II error .

Let us define some notation first.

- Let denote the critical value under the standard normal distribution such that . This is basically the

scipy.stats.norm.ppffunction, e.g. . - We also want to denote the critical value under the distribution of the sample mean difference under the null or alternate hypothesis (these are non-standard normal distributions). Let these be and respectively.

|

|---|

| Illustration for Power Analysis Derivation |

For objective 1, assuming the null hypothesis and using equation (1) above, we have . Since is a two-tailed probability and we want the critical region on the right-side, let . E.g. implies . Then:

Note that equation (2) above tells us the critical value such that we will reject the null hypothesis if the sample mean of is greater than this value. To satisfy objective 2, we must thus ensure that the probability of rejecting the null hypothesis is at least . In other words, we want . Assuming the alternate hypothesis and again using equation (1), we have . So then:

For the purpose of getting a minimum , we assume . Then using this and squaring both sides with some rearranging gives us:

Which gives us the required minimum sample size equation. If we assume , as is often assumed because we do not know the variance of the treatment, then it simplifies to the following form (as seen in Ron Kohavi's paper).

Bernoulli Events

The equation (3) above for the minimum sample size requires us to know the standard deviation under the null and alternate hypotheses. Usually, the standard deviation under the null is computed from historical data, and it is assumed that . However, if the event we are interested in may be represented as a bernoulli random variable (e.g. an impression is shown and user either clicks or does not click with some probability), the equation may be simplified.

Specifically, the variance of a bernoulli random variable with probability is . Thus, if , then , and likewise for .

So we can use and and substitute these into equation (3). We will then be able to have a minimum sample size formula by just specifying , , baseline conversion and minimum relative difference . This is the formula used by Evan Miller's sample size calculator.

Imbalanced AB Test

Another common scenario is the case where we do not split 50-50, i.e. . In this case, suppose we have , where . For example, if we have a 90-10 split, then p=9. Then we get:

Note that gives the sample size required for , and the total sample size required across both groups is .

Large Language Models

LLMs are generally used in an auto-regressive way, where the user supplies a prompt and the LLM returns a generated response. This framework makes it amenable to a wide range of tasks.

HuggingFace has a great blog post explaining how we can run LLMs on humble hardware. Typically, LLMs have billions of parameters. The following rules of thumb helps us know how much memory we need to load the LLM into memory. For a model with X billion parameters:

- Loading in

float32requires4X GBof VRAM - Loading in

bfloat16requires2X GBof VRAM - Loading in

int8requiresX GBof VRAM

Hence we see that we can load a ~7 billion parameters model with around 14GB of VRAM if loaded in bfloat16, which makes it feasible to run on GPUs like Tesla T4 with 16GB of VRAM. This can be done when loading the model with from_pretrained(..., torch_dtype=torch.bfloat16). Most models are trained in bfloat16 anyway, so it makes sense to load them at that precision.

Current popular open source LLM of that size includes mosaicml/mpt-7b, which can be easily downloaded and used using huggingface.

Quantization

It turns out that we can lower the precision of models even further than 16 bits if we use a quantization method (see e.g. Dettmers 2022, this paper is the basis for the package bitsandbytes used for quantization). The general idea is akin to encoding - we encode each number from a higher precision into a "codeword" in the lower precision (i.e. quantization). Numbers that are close to one another in the higher precision may get mapped to the same "codeword". When we want to use the encoded value, we look up the value in the higher precision that it maps to (i.e. de-quantization).

When applying this to quantizing a neural network, the steps involved are:

- Quantize all model weights to target precision (e.g.

int8) - Pass the input vector at

bfloat16 - At each layer, dequantize the weights and perform matmul in

bfloat16 - Quantize the weights again for storage

Hence while quantization lowers the memory footprint of the model, it may increase inference time. To use quantization, we need to do the following (also make sure bitsandbytes is pip installed). We can also pass load_in_4bit=True for 4bit quantization. More info on quantization usage is available at HuggingFace. An important note is that a GPU is required for quantization, at least in the bitsandbytes package.

model = AutoModelForCausalLM.from_pretrained(..., load_in_8bit=True)

Flash Attention

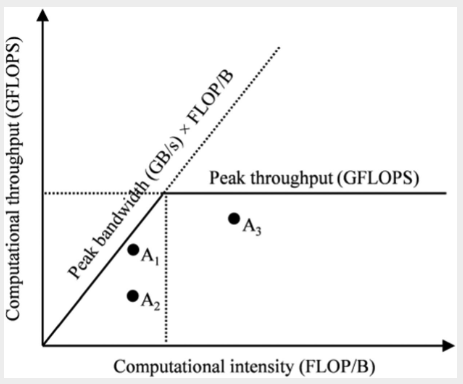

The self-attention mechanism (Dao 2022) is at the heart of the transformer performance but is also a major bottleneck in terms of memory and computational cost. One of the most successful optimizations for the attention mechanism is the Flash Attention paper.

Suppose we have an input sequence of embeddings where , such that . The transformer stores parameters , such that such that . The self-attention matrix is then computed to represent the pairwise interaction between tokens at position ( row) and position ( column). The row-wise softmax is taken to convert these into probabilities and finally the output is .

Typically, is much larger than the hidden dimensions , as can be 2,048 or larger. Hence the matrix is the bottleneck for memory and computation. The flash attention proposes to do this computation in a block-wise manner to reduce the memory usage. Furthermore, the algorithm also speeds up the computation compared to naive attention because the block-wise implementation minimizes the number of read-write operations between the faster SRAM and slower HBM.

More details can be found in the notebook at Dao 2022 - FlashAttention. We can utilize flash attention like so:

%pip install optimum

model.to_bettertransformer()

Note that this is only supported for models that have implemented flash attention, e.g. gpt-neox, bloom etc.

Flash Attention is now support natively within Pytorch as torch.nn.functional.scaled_dot_product_attention (see blog). The usage is like below. We need transformers>=4.36 and torch>=2.1.1 to use it.

from optimum.bettertransformer import BetterTransformer

from transformers import AutoModelForCausalLM

model = AutoModelForCausalLM.from_pretrained("gpt2-large", torch_dtype=torch.float16)

model = BetterTransformer.transform(model, keep_original_model=False)

Position Representation

Recent innovations in position encoding has led to accuracy improvements for long input sequences. The initial attention papers used absolute position embeddings. Given an input sequence of embeddings , absolute position embeddings are generated by the model. These position embeddings are added to the input sequence , thereby allowing to model to use these position cues.