Bateni 2017 - Affinity Clustering

Bateni 2017 - Affinity Clustering: Hierarchical Clustering at Scale

This paper proposes a hierarchical clustering algorithm which they call "affinity clustering". It is essentially Boruvka's algorithm but with slight modifications, and the main contribution of the paper is a distributed algorithm to perform such clustering at scale.

Much of this paper is devoted to theoretical analysis, but my interest here is just in implementing and understanding why the distributed algorithm is correct.

Naive Algorithm

Suppose we have an undirected graph , where is the set of nodes and is the set of undirected edges. The naive algorithm for affinity clustering (closely following Boruvka's algorithm) is as follows:

- Start with every node in its own cluster , where .

- In each round, find the lowest weighted outgoing edge from each cluster and add the edge

- i.e. for each cluster , find

- Note that this step ensures that the number of clusters at least halves, since each cluster is connected to another cluster

- If the number of clusters becomes lower than , we undo the most recent edge added until we get clusters

- At the end of each round, we have the desired number of clusters

- The next round then commences with these obtained clusters

Note that this is essentially Boruvka's algorithm (minus the undo steps), which is guaranteed to find the Minimum Spanning Tree (MST) of the graph . This implies that if we start with , we can run the naive algorithm on the MST and get exactly the same clusters as running it on , because only the edges in come into play when running affinity clustering on . Since the number of nodes in the is (by definition of an MST), we would be able to do this efficiently on a single machine (unless |V| is much larger than a few millions).

Therefore, we just need a distributed algorithm to find the MST of efficiently, and we can perform affinity clustering efficiently.

Efficient MST algorithm

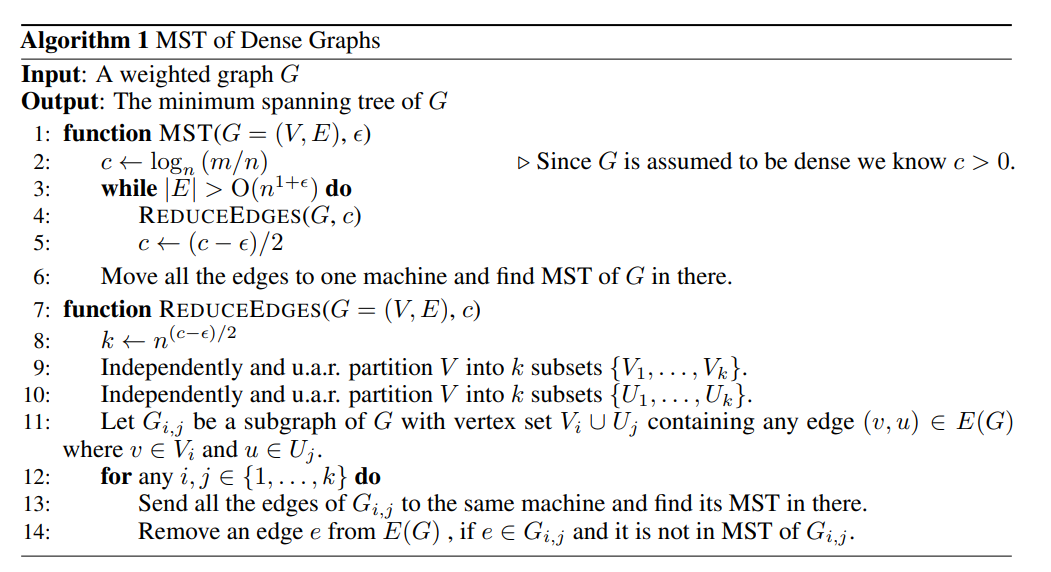

The main algorithm is the distributed MST algorithm (see below). Some notations:

- is the number of edges , is the number of nodes

- measures the average density of the graph, i.e. the average number of edges per node. is taken such that , where implies that and implies that is a fully connected graph.

- is a density parameter controlling the final number of edges remaining before we run MST on a single machine. We can probably set it to or something like that. A higher implies that we can run ReduceEdges for less steps, but we need more memory to run the final round of MST.

|

|---|

| Distributed MST Algorithm |

The algorithm is really quite simple. The main idea is that we can independently process each subgraph of comprising a random pair of vertex sets in a distributed fashion. Each worker finds the MST of the subgraph assigned to it, and any edge that is in but not in may be removed from the global edge set . In this way, we can whittle down the number of edges in until it is a small set that can fit in memory (i.e. in the order of which is not much larger than the number of nodes). Then we can just run MST one final time on the master node.

So the algorithm really hinges on Lemma 6 in the paper, which tells us that removing edges in this distributed way will still give us the correct MST at the end.

My proof by contradiction. Suppose an edge exists between nodes . Let denote a subgraph containing nodes , and suppose for contradiction that but .

First cut by removing the edge such that are in different partitions (each partition is a set of nodes). Observe that since exists in , it must be the lowest weight edge connecting and , since otherwise we could have replaced with a lower weight edge to complete the MST.

Now consider the subgraph and partition the nodes in according to to form and . Consider the MST of (might be a Minimum Spanning Forest instead, if not all nodes can be connected), and observe that there must exist a path between and in . Now remove any edges that cross and (call this edge set ). Then add back all the edges in that do not connect a path between nodes and .

Now there must exist exactly one edge remaining in that connects a path from to , since (i) a path exists between and in and (ii) there cannot be more than one edge that does so, otherwise there would have been a cycle in . We also know that this edge is not .

But is the minimum weight edge between and , and therefore it must also be the minimum weight edge between and . Hence this other edge could not have been in the MST of . We reach a contradiction.

Thus we are justified in removing edges in this way. This lemma is great because it allows us to independently process each subgraph, and if memory is of concern, we can also batch the number of subgraphs processed at each step according to the number of workers we have.

Lastly, note that is a dynamic parameter in the algorithm that measures the density (i.e. number of edges relative to number of nodes) of the graph at each step. Since we reduce the density of the graph in every step, is stepped down progressively. This also results in being stepped down progressively, where controls the number of subgraphs at each step. We start with a large , which requires less memory since small subgraphs implies that many edges are "chopped off". As the graph becomes less dense, we can afford to lower progressively and remove more edges.

Implementation

I can't seem to find an implementation of this algorithm, but it is probably easy to write a naive version of it using multiprocessing in python. We could store the edges in a sqlite database and distribute a batch of subgraphs at each turn, collect the edges to remove in the master and remove them from the db, and repeat.